Satellite Embeddings and Large-Scale Models

A few months ago, the remote sensing world was shaken by one of Google DeepMind’s most ambitious Earth observation projects: the official release of Google Satellite Embeddings. Just hours after the announcement, I dove into the white paper and tested the data myself, and I was genuinely blown away. I was also lucky enough to get a seat at the Geo for Good 2025 Summit in Singapore, where the team introduced the dataset and showcased its capabilities in person.

To be fair, the concept of embeddings isn’t new. Even in remote sensing, several research groups have developed their own embeddings from satellite data to better represent Earth’s surface. But what makes Google’s version stand out is its sheer scale and global coverage. It’s the first dataset (to my knowledge) that captures such a broad and consistent representation of the planet at once.

There are already plenty of great technical explanations of how satellite embeddings are produced (their white paper is, of course, the main source of good information), so I won’t repeat them here. Put simply, I like to think of embeddings as “PCA on steroids.” They transform data into a new (usually lower-dimensional) space that preserves the essential information while making it easier for machines to process. The tradeoff is that each dimension becomes more abstract: harder for us mortals to interpret, but far more useful for computers. By the way, why does it sound like we are sacrificing knowledge for power?
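To make the “PCA” half of the analogy concrete, here is a minimal sketch of classical PCA in plain Python: it centers toy multi-band pixel values, builds their covariance matrix, and finds the first principal axis by power iteration. It only illustrates the idea of compressing correlated bands into fewer dimensions; the Satellite Embeddings themselves are learned by a deep model, not computed this way. The function name `pca_first_component` and the pixel values are hypothetical, made up for the example.

```python
import math
import random


def pca_first_component(rows, iters=200, seed=0):
    """Classical PCA, first component only (illustrative, not how embeddings are made).

    rows: list of equal-length feature vectors (e.g. band values per pixel).
    Returns (band means, unit-length first principal axis, 1-D score per row).
    """
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d)
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiplying by cov converges to the
    # eigenvector with the largest eigenvalue, i.e. the first principal axis
    rng = random.Random(seed)
    axis = [rng.random() + 0.1 for _ in range(d)]
    for _ in range(iters):
        w = [sum(cov[i][j] * axis[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        axis = [x / norm for x in w]
    # Project each centered row onto the axis: d values -> 1 value
    scores = [sum(c[j] * axis[j] for j in range(d)) for c in centered]
    return mean, axis, scores


# Toy example: four hypothetical "pixels" whose three bands are perfectly correlated
pixels = [[0.1, 0.2, 0.3], [0.2, 0.4, 0.6], [0.3, 0.6, 0.9], [0.4, 0.8, 1.2]]
mean, axis, scores = pca_first_component(pixels)
print([round(a, 3) for a in axis])  # → [0.267, 0.535, 0.802]
```

Because the three toy bands move together, one component captures all of their variance. Real imagery needs several components, and learned embeddings go further by also folding in spatial and temporal context.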

Anyway, I’ve recently been working on a rather ambitious project that involves building an annual, nationwide land use/land cover (LULC) model for Indonesia. The idea is simple: users can draw a boundary anywhere in Indonesia, and the system automatically generates a land cover map for that area for every year from 2017 up to the latest available year, ready for further analysis.
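The workflow described above can be outlined as a simple loop: keep only the pixels whose centers fall inside the user’s boundary (an even-odd ray-casting test), then classify each pixel’s embedding for every year. This is a hypothetical sketch, not the actual system: `pixels_for_year` and `classify` stand in for whatever data loader and trained model are used, `last_year` is passed in because the latest available year changes as new annual layers are released, and in practice a platform like Google Earth Engine would handle the clipping server-side.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (lon, lat) of a pixel center
Embedding = List[float]


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Even-odd rule: cast a ray to the right of pt and count edge crossings."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's latitude can cross it
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def annual_maps(
    boundary: Sequence[Point],
    pixels_for_year: Callable[[int], Dict[Point, Embedding]],  # hypothetical loader
    classify: Callable[[Embedding], str],  # hypothetical trained model
    last_year: int,
    first_year: int = 2017,
) -> Dict[int, Dict[Point, str]]:
    """Build one land cover map per year for the pixels inside `boundary`."""
    maps = {}
    for year in range(first_year, last_year + 1):
        pixels = pixels_for_year(year)
        maps[year] = {
            pt: classify(vec)
            for pt, vec in pixels.items()
            if point_in_polygon(pt, boundary)
        }
    return maps


# Usage with stand-in data: a square boundary and three pixels, one outside it
square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
toy_pixels = {(1.0, 1.0): [1.0, 0.0], (3.0, 1.0): [0.0, 1.0], (0.5, 1.5): [0.0, 1.0]}
result = annual_maps(square, lambda year: toy_pixels,
                     lambda v: "forest" if v[0] > v[1] else "cropland", last_year=2019)
print(sorted(result))  # → [2017, 2018, 2019]
print(result[2019])    # → {(1.0, 1.0): 'forest', (0.5, 1.5): 'cropland'}
```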

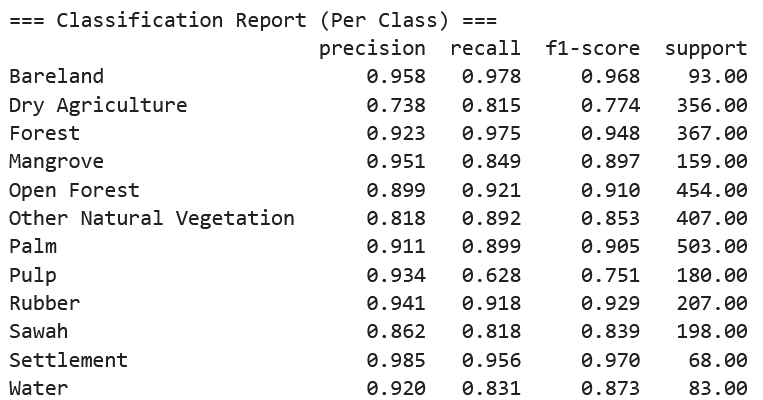

I am aiming for a detailed classification covering major agricultural commodities such as oil palm, acacia, coffee, and rubber, along with different forest types and other distinctive land covers.

Before embeddings were available, I had built several LULC models (like the one I wrote about here), but they were mostly localized due to resource constraints.

Now, with satellite embeddings (along with other advances like open labeled datasets, multi-sensor imagery, and Google Earth Engine), building large-scale models has become much more achievable for small organizations and individual researchers like me. Embeddings remove most of the heavy feature engineering and feature selection work, while also encoding spatial context (neighborhood relationships) and temporal patterns that capture vegetation phenology. As a result, even simple pixel-based machine learning methods built on top of them become much better and more powerful.
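As an illustration of how little machinery a pixel-based method needs on top of embeddings, here is a minimal nearest-centroid classifier in plain Python: it averages the labeled embedding vectors of each class and assigns a new pixel to the class with the highest cosine similarity. This is a swapped-in technique chosen for brevity and is not meant to represent the classifier used in this project. The four-dimensional toy vectors and their labels are invented (the real embeddings have 64 dimensions), and the sketch normalizes vectors itself rather than assuming they arrive unit-length.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def fit_centroids(samples):
    """samples: list of (embedding, label) pairs -> {label: unit-length class centroid}."""
    sums = {}
    for vec, label in samples:
        acc = sums.setdefault(label, [0.0] * len(vec))
        # Normalize each sample first so no single vector dominates by magnitude
        for i, x in enumerate(normalize(vec)):
            acc[i] += x
    # Normalizing the per-class sum gives the direction of the class mean
    return {label: normalize(acc) for label, acc in sums.items()}


def predict(centroids, vec):
    """Return the label whose centroid is most cosine-similar to vec."""
    v = normalize(vec)
    return max(centroids, key=lambda label: sum(a * b for a, b in zip(centroids[label], v)))


# Toy 4-D "embeddings" (hypothetical values and labels)
training = [
    ([0.9, 0.1, 0.0, 0.0], "oil palm"),
    ([0.8, 0.2, 0.0, 0.0], "oil palm"),
    ([0.0, 0.1, 0.9, 0.1], "rubber"),
    ([0.1, 0.0, 0.8, 0.2], "rubber"),
]
centroids = fit_centroids(training)
print(predict(centroids, [0.7, 0.3, 0.1, 0.0]))  # → oil palm
```

Nearest-centroid is about the simplest possible choice. Tree ensembles such as random forests are a common alternative for pixel-based LULC classification and can handle classes that a single mean vector describes poorly.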

Below is an example of my model in action, along with its accuracy metrics:

Looking Ahead

What’s next for Earth observation? I believe this is only the beginning. Soon, we’ll likely see open embeddings with shorter temporal spans (maybe monthly?) and even embeddings that incorporate richer inputs, such as hyperspectral data.

As a remote sensing scientist, this kind of progress excites me. It feels like we’re entering a new era where powerful global datasets allow small teams or even individuals to tackle problems that once required huge resources.
